Final Prototype

Interactive Motion Visualizer

For my final prototype, I am working on a live motion visualizer/game. The idea is to create an immersive 3D experience in which the world is built from the user's movements, helping the user visualize and understand the quality of his/her movements in space. For this visualization, I drew inspiration from the theme of fluid and rigid structures, representing jerky and fluid movements differently. This could give a person with autism spectrum disorder (ASD) a completely immersive medium for movement-based expression: a real break from a neurotypical environment, where the user can be him/herself. At the same time, the user could develop his or her sense of expressive movement.

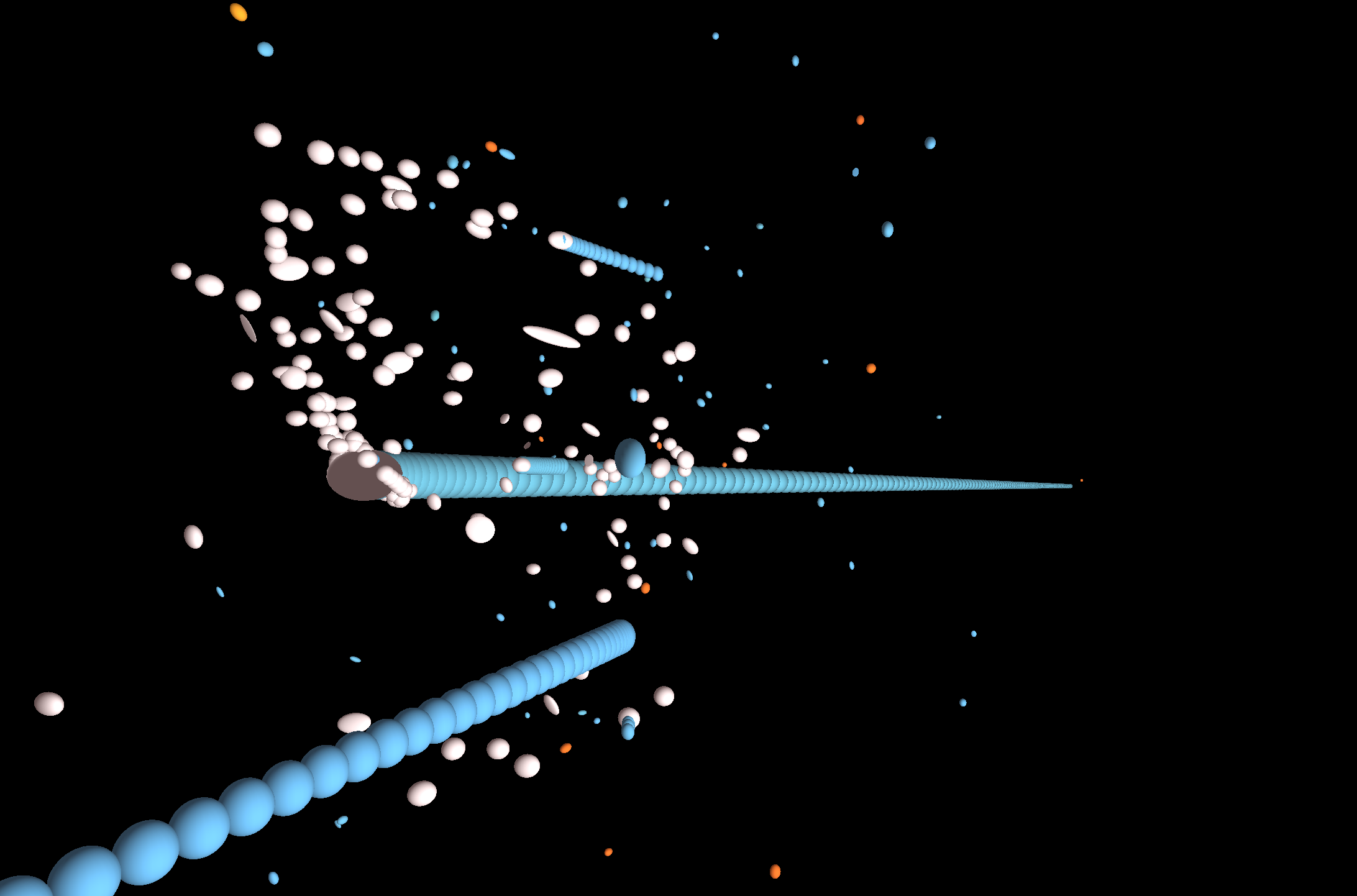

In this version, I am using WebGL to animate the environment and a MYO armband to capture the movement data. I have created a switch that uses direction shifts (measured with the dot product of velocity vectors) and jerk (the third derivative of position) to determine whether a movement is jerky or smooth. Jerky movements are visualized as a blue string of spheres emerging from the position point, with both the length of the string and the number of spheres proportional to the point's acceleration. In cases of higher jerk, a rotating orange sphere also emerges, spiraling around and away from the vector. Fluid movements are visualized by a blue sphere separating from the position sphere and traveling with the instantaneous velocity observed at that position. If the acceleration is high, a rotating orange sphere spirals off with it.
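The jerky/smooth switch can be sketched as follows. This is a minimal sketch, not the prototype's WebGL code: it assumes 3D position samples arriving at a fixed interval `dt`, estimates velocity, acceleration, and jerk with backward finite differences, and uses hypothetical thresholds (`JERK_THRESHOLD`, `DIRECTION_THRESHOLD`) that would need tuning against real data. A movement is flagged as jerky if the jerk magnitude is large or if the normalized dot product of the last two velocity vectors shows a sharp change of direction.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical tuning constants; the prototype's real thresholds are not documented.
JERK_THRESHOLD = 50.0       # jerk magnitude (units/s^3) above which a movement counts as jerky
DIRECTION_THRESHOLD = 0.5   # cosine of the angle between the last two velocities (0.5 = 60 degrees)


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def norm(a: Vec3) -> float:
    return math.sqrt(dot(a, a))


def diff(samples: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Backward finite difference: each call raises the derivative order by one."""
    return [scale(sub(samples[i], samples[i - 1]), 1.0 / dt) for i in range(1, len(samples))]


def is_jerky(positions: Sequence[Vec3], dt: float) -> bool:
    """Classify the newest sample from the last four positions (the minimum for a jerk estimate)."""
    if len(positions) < 4:
        return False  # not enough history yet; treat as smooth
    window = positions[-4:]
    vel = diff(window, dt)     # 3 velocity estimates
    acc = diff(vel, dt)        # 2 acceleration estimates
    jerk = diff(acc, dt)[-1]   # 1 jerk estimate (third derivative of position)

    # Direction shift: cosine of the angle between the last two velocity vectors.
    v_prev, v_curr = vel[-2], vel[-1]
    denom = norm(v_prev) * norm(v_curr)
    cos_angle = dot(v_prev, v_curr) / denom if denom > 1e-9 else 1.0

    return norm(jerk) > JERK_THRESHOLD or cos_angle < DIRECTION_THRESHOLD
```

Because each finite difference amplifies sensor noise, raw data would likely need smoothing before the jerk estimate becomes usable; the two tests (jerk and direction) are combined with `or` so that either kind of abruptness triggers the jerky visualization.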

It is important to note that the visualization is not actually responding to the absolute position of the user's arm in space. Accelerometers are an extremely unreliable sensor for determining position relative to any external frame of reference, because building position from acceleration requires integrating twice, and this double integration accumulates a huge amount of error: even a small constant bias in the readings grows quadratically with time. This limits the kinds of statistics that can be calculated on the movement. As a result, I am switching to the Kinect for collecting data. I have included a Kinect in this video to help visualize the complete system. The Kinect will provide more reliable, robust data that will let me consider not only more aspects of the movement of individual points, but also the movement of points on the body relative to each other. Imagine if the structures created by a person's movements were a direct extension of his/her body in space. What an exciting virtual world to play in.
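To see why double integration drifts, consider a minimal 1-D sketch using explicit Euler integration, in which the arm is perfectly still but the accelerometer reports a small constant bias. The sample rate (50 Hz) and bias (0.05 m/s², about 0.005 g) are hypothetical values chosen for illustration, not measurements from the MYO armband. Integrating twice turns the bias b into an apparent displacement of roughly 0.5·b·t², which is about 0.63 m after 5 s and about 2.5 m after 10 s.

```python
from typing import List


def integrate_position(accels: List[float], dt: float) -> List[float]:
    """Naively double-integrate 1-D acceleration samples with explicit Euler steps."""
    velocity = 0.0
    position = 0.0
    positions = []
    for a in accels:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
        positions.append(position)
    return positions


# Hypothetical scenario: the arm is perfectly still (true acceleration is zero),
# but the sensor reports a small constant bias on every sample.
DT = 0.02               # 50 Hz sample rate (assumed)
BIAS = 0.05             # m/s^2 of bias (assumed)
samples = [BIAS] * 500  # 10 seconds of readings at rest

drift = integrate_position(samples, DT)
print(f"apparent displacement after  5 s: {drift[249]:.2f} m")
print(f"apparent displacement after 10 s: {drift[-1]:.2f} m")
```

Doubling the elapsed time roughly quadruples the error, which is why a camera-based sensor that measures position directly, such as the Kinect, avoids this failure mode.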

I am also considering a shift toward a virtual reality system. I imagine a user immersed in a world of sculptures created live by his or her movements. I see the user being able to interact with the world intentionally, moving spheres that have been created or walking into them with physical body movements. What would be EVEN MORE EXCITING is haptic feedback from the world. If the user presses against an edge, a wearable device on his or her arms could become more rigid, resisting movement against the edges of this imaginary world. To visualize this, I have included a Kinect and the wearable prototype that I created in the video above.

I experimented with a non-spherical shape so that I could rotate the object to face the direction of motion. I have not yet figured out the right algorithm for that orientation, so the objects appear to rotate randomly even though they are not.
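One common way to face an object along its direction of motion is the shortest-arc rotation: build the unit quaternion that rotates the model's local forward axis onto the normalized velocity vector. The sketch below is a swapped-in technique, not the prototype's code; it assumes the model's forward axis is +Z, quaternions are stored as (w, x, y, z), and the velocity is non-zero (a near-zero velocity has no meaningful direction, so a renderer would typically keep the previous orientation in that case).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed layout


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    if n < 1e-9:
        raise ValueError("zero-length vector has no direction")
    return (v[0] / n, v[1] / n, v[2] / n)


def align_forward_to_velocity(velocity: Vec3, forward: Vec3 = (0.0, 0.0, 1.0)) -> Quat:
    """Shortest-arc unit quaternion that rotates `forward` onto the direction of `velocity`."""
    f = normalize(forward)
    v = normalize(velocity)
    d = dot(f, v)
    if d < -1.0 + 1e-9:
        # Opposite directions: any axis perpendicular to f works for a 180-degree turn.
        axis = cross(f, (1.0, 0.0, 0.0))
        if dot(axis, axis) < 1e-9:
            axis = cross(f, (0.0, 1.0, 0.0))
        x, y, z = normalize(axis)
        return (0.0, x, y, z)
    # (1 + cos(theta), sin(theta) * axis) normalizes to (cos(theta/2), sin(theta/2) * axis).
    c = cross(f, v)
    w = 1.0 + d
    n = math.sqrt(w * w + dot(c, c))
    return (w / n, c[0] / n, c[1] / n, c[2] / n)


def rotate(q: Quat, p: Vec3) -> Vec3:
    """Rotate p by unit quaternion q, i.e. q * p * conj(q), in expanded vector form."""
    w, x, y, z = q
    u = (x, y, z)
    t = cross(u, p)
    t = (2.0 * t[0], 2.0 * t[1], 2.0 * t[2])
    ut = cross(u, t)
    return (p[0] + w * t[0] + ut[0],
            p[1] + w * t[1] + ut[1],
            p[2] + w * t[2] + ut[2])
```

In a WebGL scene, the resulting quaternion would be applied to the object's model matrix each frame; Three.js, for example, provides `Quaternion.setFromUnitVectors` for the same computation.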

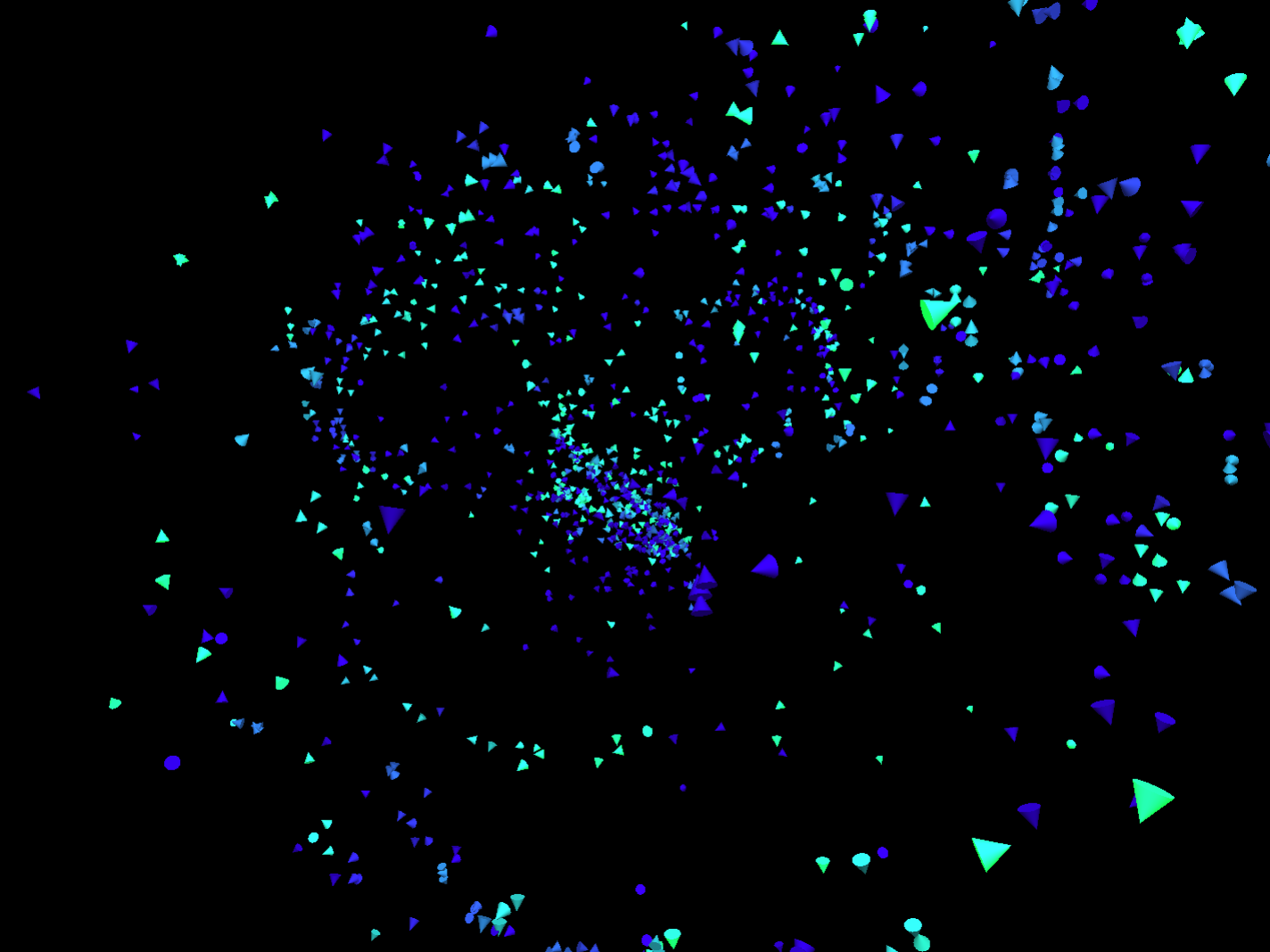

In this version, I have returned to spheres. The color of each sphere is determined by the EMG sensors, so greener spheres represent moments of greater tension. For each frame, I added two more spheres, placed at a distance from the original sphere that is also determined by the tension. These two spheres orbit the original sphere with a larger radius for less tension and a smaller radius for more tension, and they rotate with greater angular velocity if the center sphere was created with greater acceleration. Here is the animation:
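The tension and acceleration mappings described above can be sketched as a per-frame function. Everything numeric here is hypothetical: it assumes the EMG tension has already been normalized to the range 0 to 1, picks an arbitrary bluish low-tension color (the source only says that greener means more tension), places the two orbiting spheres on opposite sides of a circle in the XY plane, and makes angular velocity linear in the acceleration magnitude.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[float, float, float]

# Hypothetical mapping constants; the prototype's actual values are not documented.
BASE_COLOR: RGB = (0.2, 0.4, 1.0)   # color at zero tension (assumed bluish)
TENSE_COLOR: RGB = (0.1, 1.0, 0.2)  # color at full tension (green)
MAX_RADIUS = 2.0   # orbit radius at zero tension
MIN_RADIUS = 0.5   # orbit radius at full tension
SPIN_GAIN = 0.8    # angular velocity (rad/s) per unit of acceleration magnitude


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


@dataclass
class FrameSpheres:
    color: RGB
    orbit_radius: float
    angular_velocity: float


def map_frame(tension: float, accel_magnitude: float) -> FrameSpheres:
    """Map normalized EMG tension and acceleration magnitude to one frame's sphere parameters."""
    t = max(0.0, min(1.0, tension))  # clamp tension to [0, 1]
    color = (lerp(BASE_COLOR[0], TENSE_COLOR[0], t),
             lerp(BASE_COLOR[1], TENSE_COLOR[1], t),
             lerp(BASE_COLOR[2], TENSE_COLOR[2], t))  # greener with more tension
    radius = lerp(MAX_RADIUS, MIN_RADIUS, t)          # more tension -> tighter orbit
    omega = SPIN_GAIN * accel_magnitude               # more acceleration -> faster orbit
    return FrameSpheres(color, radius, omega)


def satellite_positions(center: Vec3, frame: FrameSpheres, time_s: float) -> Tuple[Vec3, Vec3]:
    """Positions of the two orbiting spheres, on opposite sides of a circle in the XY plane."""
    angle = frame.angular_velocity * time_s
    dx = frame.orbit_radius * math.cos(angle)
    dy = frame.orbit_radius * math.sin(angle)
    cx, cy, cz = center
    return (cx + dx, cy + dy, cz), (cx - dx, cy - dy, cz)
```

Keeping the mapping in one pure function makes it easy to swap the linear interpolation for a different response curve once real EMG ranges are known.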

I have not yet been able to gamify the interaction. I will work on this next week. I plan to create a function so that you can jump to make all of the beads fall down; it would be great to see them get rebuilt. I will also try to improve the positioning functionality so that you have more agency in creating your sculpture, and to make it possible to move into and out of the sculpture. Adding emotional interpretation will be very challenging at this point. That will require a lot of work and probably some data analysis that I will have to learn over the summer. We'll see what I can accomplish.